[latexpage]

Testing has been a major subject during this pandemic. Around 30,000 people a day are being tested in the Netherlands alone, so it is important that a test can accurately predict whether someone actually has the disease. However, an accurate test is not always as predictive as you would like. This phenomenon is known as “the medical test paradox”.

Bayes’ rule

The medical test paradox is an example that is often used to introduce Bayes’ rule. In Bayesian statistics, probability is interpreted as a degree of belief. This allows, for example, the risk of an individual having been exposed to covid-19 to be assessed more accurately, because Bayes’ rule conditions on related events that can change the probabilities. This becomes clearer when we write it as a formula:

\begin{equation*}
P(\text{Sick} \mid \text{Positive}) = \frac{P(\text{Positive} \mid \text{Sick})\,P(\text{Sick})}{P(\text{Positive})}.
\end{equation*}

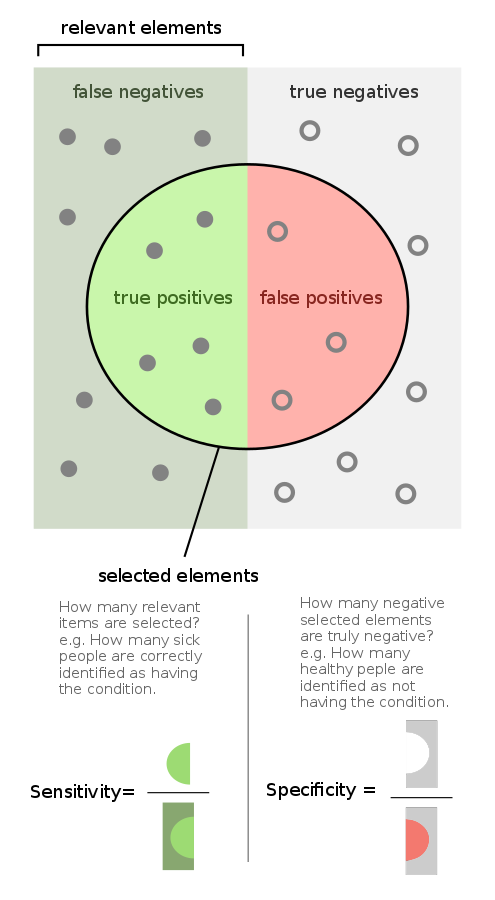

Here, $P(Sick | Positive)$ is the probability that someone who tested positive actually has the disease. $P(Positive | Sick)$, the probability that someone who is sick actually tests positive, is known as the True Positive Rate (TPR). It is also called the sensitivity of the test and plays an important role in its predictive accuracy. Another important number is the True Negative Rate (TNR), $P(Negative | Not \: Sick)$, also called the specificity of the test. Together, sensitivity and specificity describe how accurate a test is. The figure above illustrates this with an example from a sample.
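The denominator $P(Positive)$ in Bayes’ rule is rarely known directly. Using the law of total probability, it can be split over the sick and the healthy, which expresses Bayes’ rule entirely in terms of the prevalence $P(Sick)$, the sensitivity (TPR) and the specificity (TNR):

\begin{equation*}
P(\text{Positive}) = P(\text{Positive} \mid \text{Sick})\,P(\text{Sick}) + P(\text{Positive} \mid \text{Not Sick})\,\bigl(1 - P(\text{Sick})\bigr),
\end{equation*}

where $P(\text{Positive} \mid \text{Not Sick}) = 1 - \text{TNR}$, so that

\begin{equation*}
P(\text{Sick} \mid \text{Positive}) = \frac{\text{TPR} \cdot P(\text{Sick})}{\text{TPR} \cdot P(\text{Sick}) + (1 - \text{TNR})\bigl(1 - P(\text{Sick})\bigr)}.
\end{equation*}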

The paradox

So why is this called a paradox? For the sake of simplicity, let us assume that covid-19 has a prevalence of 1%, meaning that 1% of the population is infected with the virus. Taking a sample of 1000 people, we then have 10 people who actually have the virus and 990 people who are healthy. After testing these people, we find that of the 10 people who actually have the virus, one tested negative: there is one false negative. Furthermore, of the 990 people who do not have the virus, 89 tested positive; in other words, there are 89 false positives. The other 901 people correctly tested negative.
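The sample of 1000 people follows directly from three rates. The minimal sketch below rebuilds it, assuming a specificity of 91% (the figure quoted in the next paragraph) and rounding each expected count to a whole person; `expected_counts` is a hypothetical helper name used only for illustration.

```python
def expected_counts(population, prevalence, sensitivity, specificity):
    """Return expected (TP, FN, FP, TN) counts, rounded to whole people."""
    sick = round(population * prevalence)
    healthy = population - sick
    true_pos = round(sick * sensitivity)             # sick and tested positive
    false_neg = sick - true_pos                      # sick but tested negative
    false_pos = round(healthy * (1 - specificity))   # healthy but tested positive
    true_neg = healthy - false_pos                   # healthy and tested negative
    return true_pos, false_neg, false_pos, true_neg


tp, fn, fp, tn = expected_counts(1000, 0.01, 0.90, 0.91)
print(f"TP={tp} FN={fn} FP={fp} TN={tn}")  # TP=9 FN=1 FP=89 TN=901
```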

As a consequence, if we know nothing about a patient other than the fact that he or she tested positive, we know that he or she belongs either to the group of 89 false positives or to the 9 true positives. Hence, the probability of having the disease given the test result is $P(Covid | Positive) = \frac{9}{89+9} \approx \frac{1}{11}$. In medical terms, this is called the Positive Predictive Value (PPV). Now recall the sensitivity and specificity, which describe the accuracy of a test. In our example, the sensitivity of the test is 90% $\left(\frac{9}{10}\right)$ and the specificity is around 91% $\left(\frac{901}{990}\right)$. The paradox is that the test gives correct results for about 91% of the people who take it (910 out of 1000), yet only about 1 in 11 people who test positive actually have the disease.
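The four counts from the sample are enough to compute every figure in this paragraph. This sketch uses Python’s `fractions.Fraction` so the ratios stay exact; the variable names are illustrative.

```python
from fractions import Fraction

# Counts from the 1000-person sample described above
true_pos, false_neg, false_pos, true_neg = 9, 1, 89, 901
total = true_pos + false_neg + false_pos + true_neg

sensitivity = Fraction(true_pos, true_pos + false_neg)  # P(Positive | Sick)
specificity = Fraction(true_neg, true_neg + false_pos)  # P(Negative | Not Sick)
accuracy = Fraction(true_pos + true_neg, total)         # share of correct results
ppv = Fraction(true_pos, true_pos + false_pos)          # P(Sick | Positive)

for name, value in [("sensitivity", sensitivity), ("specificity", specificity),
                    ("accuracy", accuracy), ("PPV", ppv)]:
    print(f"{name:>11}: {value} = {float(value):.1%}")
```

Accuracy and PPV come from the same table but answer different questions: how often the test is right overall, versus how often a positive result is right.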

This is a bit of a problem, especially during a global pandemic. Of all the places where math seems counter-intuitive, this is one where it matters most. One example where this paradox came to light was a statistics seminar that Gerd Gigerenzer gave to medical doctors. The doctors were given the case of a woman who tested positive for breast cancer, with a prevalence of 1%, a sensitivity of 90% and a specificity of 91%: the same numbers as in our covid example. The question they were asked was: “How many women who test positive actually have breast cancer?” Moreover, nothing was known about the patient’s symptoms, only that she tested positive. The doctors could choose from four options: A) 9 in 10, B) 8 in 10, C) 1 in 10 and D) 1 in 100. In one of the sessions, over half of the doctors present chose answer A, while the correct answer is C. This clearly shows that even doctors find it counter-intuitive that a test with such high accuracy can have such a low predictive value.
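The doctors’ question can be answered without a sample by plugging the three rates into Bayes’ rule, with $P(Positive)$ expanded over the sick and the healthy. This is a minimal sketch; the function name `ppv` and the dictionary of answer options are illustrative choices, not part of the original seminar material.

```python
def ppv(prevalence, sensitivity, specificity):
    """P(Sick | Positive) via Bayes' rule, expanding P(Positive) over both groups."""
    p_pos_and_sick = sensitivity * prevalence                 # P(Positive and Sick)
    p_pos_and_healthy = (1 - specificity) * (1 - prevalence)  # P(Positive and Not Sick)
    return p_pos_and_sick / (p_pos_and_sick + p_pos_and_healthy)


p = ppv(prevalence=0.01, sensitivity=0.90, specificity=0.91)
print(f"P(cancer | positive) = {p:.3f}, about 1 in {round(1 / p)}")

# The four answer options the doctors could choose from
options = {"A": 9 / 10, "B": 8 / 10, "C": 1 / 10, "D": 1 / 100}
closest = min(options, key=lambda k: abs(options[k] - p))
print("Closest option:", closest)
```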

Bayesian solution

A more useful way to understand what a test does is to think of it as updating your chances of having the disease, rather than as giving a definitive answer. This can be made precise in Bayesian terms. Recall that in our example the prevalence was 1%, which means that 1 in 100 people have the disease. This is called the prior probability: simply the probability of having the disease before taking the test. When we see a positive test, this degree of belief is updated by the so-called Bayes factor, which expresses how much more likely it is that someone with the disease tests positive than someone without the disease. In a formula:

\begin{equation*}
\text{Bayes factor} = \frac{P(\text{Positive} \mid \text{Covid})}{P(\text{Positive} \mid \text{No Covid})}.
\end{equation*}

Notice that $P(Positive | Covid)$ is the sensitivity and $P(Positive | No \: Covid)$ is the false positive rate (FPR) of the test, defined as $\text{FPR} = 1 - \text{specificity}$. Hence, in our example, the Bayes factor is $\frac{90\%}{9\%} = 10$. The so-called posterior, which can be interpreted as the PPV, is then approximately the prior multiplied by the Bayes factor. In our example, this gives $10 \times \frac{1}{100} = 0.1$, which is very close to the answer we got from the sample population.
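The sketch below computes the Bayes factor using FPR = 1 - specificity and compares the prior-times-factor shortcut with the exact posterior from Bayes’ rule. The function name `bayes_factor` is illustrative, and the shortcut is only a good approximation when the prior is small.

```python
def bayes_factor(sensitivity, specificity):
    """Ratio P(Positive | Covid) / P(Positive | No Covid)."""
    false_positive_rate = 1 - specificity  # FPR = 1 - specificity
    return sensitivity / false_positive_rate


sensitivity, specificity, prior = 0.90, 0.91, 0.01
factor = bayes_factor(sensitivity, specificity)

# Shortcut: posterior is roughly prior times Bayes factor (small priors only)
shortcut = prior * factor

# Exact posterior from Bayes' rule, for comparison
exact = sensitivity * prior / (sensitivity * prior + (1 - specificity) * (1 - prior))

print(f"Bayes factor: {factor:.1f}")
print(f"prior x factor: {shortcut:.3f}, exact posterior: {exact:.3f}")
```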

However, multiplying the prior probability by the Bayes factor breaks down for higher priors. For example, with a prior of $10\%$ we would get a posterior of 100%, which is clearly not the correct answer. The key to tackling this problem is to replace probabilities with odds. Odds express the ratio of positive cases to negative ones: odds of 1:1 correspond to a probability of 50%, and odds of 1:4 to a probability of 20%. If we express our priors as odds instead of probabilities, multiplying by the Bayes factor always gives the correct posterior. A prior of 10% corresponds to odds of 1:9. Multiplying by the Bayes factor of 10 gives posterior odds of 10:9, which is a probability of $\frac{10}{19} \approx 53\%$. To check this probability, I challenge you to redo the sample population with the same sensitivity and specificity but a prevalence of 10%. Likewise, a prior of 1% corresponds to odds of 1:99; multiplying by the Bayes factor gives posterior odds of 10:99, which is a probability of $\frac{10}{109} \approx \frac{1}{11}$. This matches what we found earlier using the sample population.
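The odds form of the update, posterior odds = prior odds × Bayes factor, fits in a few lines. Using `fractions.Fraction` keeps the results exact, so the 10:9 and 10:99 posteriors come out as exactly 10/19 and 10/109. The helper names are hypothetical, and the Bayes factor is the rounded value of 10 used in the text.

```python
from fractions import Fraction


def probability_to_odds(p):
    """Convert a probability p into the odds p : (1 - p), as a single ratio."""
    return p / (1 - p)


def odds_to_probability(odds):
    """Convert odds (positive : negative) back into a probability."""
    return odds / (1 + odds)


def posterior_probability(prior, bayes_factor):
    """Update a prior probability with a Bayes factor using the odds form."""
    posterior_odds = probability_to_odds(prior) * bayes_factor
    return odds_to_probability(posterior_odds)


for prior in (Fraction(1, 100), Fraction(1, 10)):
    naive = prior * 10                       # multiplying the probability directly
    exact = posterior_probability(prior, 10)  # multiplying the odds instead
    print(f"prior {prior}: prior x factor = {naive}, odds update = {exact} ({float(exact):.1%})")
```

For the 10% prior, the naive product reaches exactly 1 (a certainty), while the odds update gives 10/19.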

Covid tests

A study by Kucirka et al. (2020) has shown that the sensitivity of the RT-PCR test, the most commonly used test for covid-19, is at best around 80%. This was based on a sample of 1330 people, tested over several days to determine how long after infection the test performs best. Fortunately, its specificity is close to 100%. However, as this article has shown, even with these seemingly accurate figures the positive predictive value would still only be around 45% (taking a specificity of 99% and a prevalence of 1%).
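The 45% figure can be checked with the odds form of Bayes’ rule: at 1% prevalence the prior odds are 1:99, and the Bayes factor is 0.80 / 0.01 = 80. The sketch below (function name illustrative) also evaluates two hypothetical prevalences, 0.1% and 10%, only to show how strongly the PPV depends on how common the disease is.

```python
def ppv_from_odds(prevalence, sensitivity, specificity):
    """Positive predictive value via: posterior odds = prior odds x Bayes factor."""
    prior_odds = prevalence / (1 - prevalence)
    bayes_factor = sensitivity / (1 - specificity)
    posterior_odds = prior_odds * bayes_factor
    return posterior_odds / (1 + posterior_odds)


SENSITIVITY, SPECIFICITY = 0.80, 0.99  # best-case RT-PCR figures quoted in the text

# 1% is the prevalence used in the text; 0.1% and 10% are hypothetical, for comparison
for prevalence in (0.001, 0.01, 0.10):
    ppv = ppv_from_odds(prevalence, SENSITIVITY, SPECIFICITY)
    print(f"prevalence {prevalence:.1%}: PPV = {ppv:.1%}")
```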

This is why doctors who have tested positive and are coming out of quarantine need three negative tests in a row before they are allowed to go back to work: each additional negative result further lowers the probability that they are still infected, so they can be more certain of the actual outcome. Hopefully, you will never have to get tested, but now you know some of the statistics behind all the testing that is going on in the world.
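To see how repeated negative results build confidence, the sketch below updates the odds of still being infected once per negative test, using the negative-result Bayes factor (1 - sensitivity) / specificity. It assumes the test results are independent of each other, which repeated swabs of the same person need not be, and it starts from a hypothetical 50% prior chosen only for illustration. The sensitivity and specificity are the RT-PCR figures quoted above; the function name is illustrative.

```python
def probability_after_negatives(prior, sensitivity, specificity, n_negatives):
    """Probability of still being infected after n independent negative tests."""
    negative_factor = (1 - sensitivity) / specificity  # P(Neg | Sick) / P(Neg | Not Sick)
    odds = prior / (1 - prior) * negative_factor ** n_negatives
    return odds / (1 + odds)


PRIOR = 0.5  # hypothetical starting belief, for illustration only
for n in range(4):
    p = probability_after_negatives(PRIOR, sensitivity=0.80, specificity=0.99, n_negatives=n)
    print(f"after {n} negative test(s): {p:.2%}")
```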

References

Kucirka, L. M., Lauer, S. A., Laeyendecker, O., Boon, D., & Lessler, J. (2020). Variation in False-Negative Rate of Reverse Transcriptase Polymerase Chain Reaction–Based SARS-CoV-2 Tests by Time Since Exposure. Annals of Internal Medicine, 173(4), 262–267.

3Blue1Brown (2020). The medical test paradox: Can redesigning Bayes rule help?

This article was written by Sam Ansari.